Author: MirandaYang

Since its introduction, GAN has attracted a lot of attention, especially in computer vision. "In-depth interpretation: GAN model and its progress in 2016" [1] gives a detailed overview of the advances in GANs during that year and is a great resource for beginners. This article focuses on applications of GANs in NLP and reads more like a set of paper notes than an introductory guide. It does not cover GAN basics, so if you are unfamiliar with the fundamentals, I recommend starting with [1]. I'm a bit lazy, so I won't go into those details here. Since it has been a while since I last wrote in Chinese, please bear with me and let me know if you spot any inaccuracies.

Although GANs have achieved impressive results in image generation, they have not made the same breakthroughs in natural language processing (NLP) tasks. The main reason is that the original GAN was designed for real-valued data, not discrete data like text. Dr. Ian Goodfellow, the creator of GAN, once said: “GANs are not currently applied to natural language processing (NLP). They were originally defined in the real domain, where generators create synthetic data and discriminators evaluate it. The gradient from the discriminator tells the generator how to make the output more realistic. However, this only works well with continuous data. For example, if a pixel value is 1.0, it can be slightly adjusted to 1.0001. But if the output is a word like 'penguin', you can't just change it to 'penguin + 0.001' because such a word doesn't exist. Since NLP relies on discrete elements (words, letters, or syllables), it's very challenging to apply GANs directly.” To date, no strong learning algorithm has successfully addressed this issue.

When generating text, GAN models the entire sequence. However, evaluating the quality of a partially generated sequence is difficult. Another challenge arises from using RNNs (commonly used in text generation). When generating text from latent codes, errors accumulate exponentially as the sentence length increases. The beginning might look good, but the quality deteriorates quickly. Additionally, controlling the length of the generated text is also problematic due to the randomness of the input.

Below, I will discuss some recent papers that explore applying GAN to NLP:

1. **Generating Text via Adversarial Training**

Paper link: http://people.duke.edu/~yz196/pdf/textgan/paper.pdf

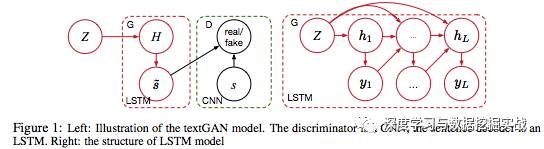

This paper, from the 2016 NIPS GAN Workshop, attempts to apply GAN theory to text generation. The approach is relatively simple: it uses an LSTM as the generator and replaces the non-differentiable choice of output words with a smooth approximation, so that gradients from the discriminator can reach the generator. The structure is shown below:

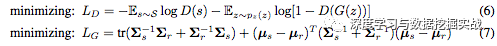
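To illustrate what a "smooth approximation" of the output can look like, here is a minimal sketch of a generic soft-argmax: instead of feeding the embedding of the single most likely word to the next step, it feeds a probability-weighted mix of all word embeddings, computed with a temperature-scaled softmax. This is an assumption for illustration, not the paper's exact formulation (its temperature schedule and layer shapes are not reproduced), and the function names and toy embeddings are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax; a lower temperature makes it sharper."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def soft_argmax_embedding(
    logits: List[float], embeddings: List[List[float]], temperature: float
) -> List[float]:
    """Probability-weighted mix of word embeddings.

    Unlike picking the embedding of the argmax word, this is a smooth
    function of the logits, so gradients can flow back through it.
    """
    probs = softmax(logits, temperature)
    dim = len(embeddings[0])
    return [sum(p * emb[d] for p, emb in zip(probs, embeddings)) for d in range(dim)]


# Hypothetical toy vocabulary: 3 words with 2-dimensional embeddings.
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
logits = [2.0, 0.5, 0.1]

warm = soft_argmax_embedding(logits, embeddings, temperature=1.0)   # a blend of all three words
cold = soft_argmax_embedding(logits, embeddings, temperature=0.05)  # very close to embeddings[0]
print(warm, cold)
```

As the temperature shrinks, the mix approaches the argmax word's embedding, which is why annealing the temperature is a common way to trade smoothness for fidelity.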

The objective function differs from the standard GAN: instead, the generator is trained with feature matching. Optimization alternates between two steps, where equation (6) is the standard GAN loss and equation (7) is the feature-matching loss.
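Since the equations themselves are not reproduced in this note, the sketch below only shows the basic idea of feature matching in its simplest form: the generator is penalized by the squared distance between the mean discriminator features of real sentences and of generated sentences. This mean-only version is an assumption chosen for brevity; the paper's equation (7) may match richer statistics. The function names and feature values are hypothetical.

```python
from typing import List


def mean_features(batch: List[List[float]]) -> List[float]:
    """Average each feature dimension over a batch of feature vectors."""
    n = len(batch)
    return [sum(row[d] for row in batch) / n for d in range(len(batch[0]))]


def feature_matching_loss(real_feats: List[List[float]], fake_feats: List[List[float]]) -> float:
    """Squared distance between mean discriminator features of real and generated sentences."""
    mu_real = mean_features(real_feats)
    mu_fake = mean_features(fake_feats)
    return sum((a - b) ** 2 for a, b in zip(mu_real, mu_fake))


# Hypothetical features f(s) taken from an intermediate discriminator layer.
real = [[1.0, 2.0], [3.0, 4.0]]  # mean [2.0, 3.0]
fake = [[0.0, 0.0], [2.0, 2.0]]  # mean [1.0, 1.0]
print(feature_matching_loss(real, fake))  # 5.0
```

The generator minimizes this loss rather than directly maximizing the discriminator's error, which tends to give it a more stable training signal.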

The initialization is interesting, particularly the pre-training of the discriminator. It uses both original sentences and modified ones (by swapping two words) for training. This helps the discriminator distinguish between real and fake texts.
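The pre-training data described above can be sketched as follows: every real sentence becomes a positive example and a copy with two randomly chosen words swapped becomes a negative one. Whitespace tokenization, the fixed seed and the function names are assumptions for illustration, not details taken from the paper.

```python
import random
from typing import List, Tuple


def swap_two_words(tokens: List[str], rng: random.Random) -> List[str]:
    """Return a copy of the sentence with two randomly chosen positions swapped."""
    out = list(tokens)
    if len(out) >= 2:
        i, j = rng.sample(range(len(out)), 2)
        out[i], out[j] = out[j], out[i]
    return out


def build_pretraining_data(sentences: List[str], seed: int = 0) -> List[Tuple[List[str], int]]:
    """Pair each real sentence (label 1) with a word-swapped copy (label 0).

    If the two swapped words happen to be identical, the "fake" copy equals
    the original; a real pipeline might filter such cases out.
    """
    rng = random.Random(seed)
    data = []
    for sentence in sentences:
        tokens = sentence.split()
        data.append((tokens, 1))
        data.append((swap_two_words(tokens, rng), 0))
    return data


for tokens, label in build_pretraining_data(["the cat sat on the mat", "gans are hard to train"]):
    print(label, " ".join(tokens))
```

Because the negatives contain exactly the same words as the positives, the discriminator cannot rely on vocabulary alone and has to pay attention to word order.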

The generator is updated five times for every discriminator update, which is the opposite of the standard GAN setup. The stated reason is that the LSTM generator has more parameters and is harder to train.

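However, the LSTM decoder suffers from exposure bias: during training it conditions on the ground-truth previous words, but at generation time it must condition on its own predictions. To reduce this mismatch, the model gradually replaces the ground-truth words fed to the decoder with its own predicted outputs during training, in the style of scheduled sampling.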
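A minimal sketch of this kind of scheduled sampling: at each decoding step, the next input is the ground-truth word with some probability and the model's own prediction otherwise, and that probability decays over training. The linear decay schedule and all names here are assumptions for illustration; the paper's actual schedule may differ.

```python
import random


def teacher_forcing_prob(step: int, total_steps: int) -> float:
    """Linearly decay the probability of feeding the ground-truth token from 1 to 0."""
    return max(0.0, 1.0 - step / total_steps)


def next_decoder_input(ground_truth: str, predicted: str, step: int, total_steps: int,
                       rng: random.Random) -> str:
    """Choose the token fed to the decoder at the next time step.

    Early in training this is almost always the ground-truth word (teacher
    forcing); later it is increasingly the model's own prediction, which is
    what the decoder will see at generation time.
    """
    if rng.random() < teacher_forcing_prob(step, total_steps):
        return ground_truth
    return predicted


rng = random.Random(0)
for step in (0, 500, 1000):
    picks = [next_decoder_input("cat", "dog", step, 1000, rng) for _ in range(1000)]
    print(step, picks.count("dog"))  # how often the model's own prediction was used
```

At step 0 the decoder always sees the ground truth, and at the end of the schedule it always sees its own predictions.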

2. **SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient**

Paper link: https://arxiv.org/pdf/1609.05473.pdf

Source: https://github.com/LantaoYu/SeqGAN

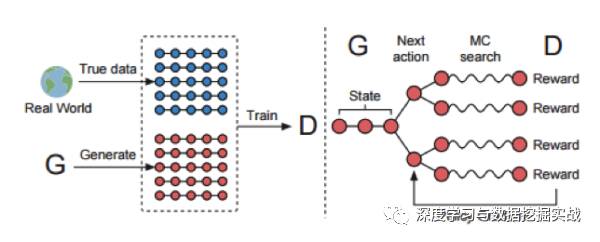

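This paper treats sequence generation as a sequential decision-making problem: the generator acts as a policy whose state is the sequence of tokens generated so far and whose action is the choice of the next token.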

In step 1, the discriminator D distinguishes between real and fake samples. In step 2, the generator G is updated using policy gradients based on the discriminator's feedback.

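Because the discriminator only scores complete sequences, the reward for a partially generated sequence is estimated with Monte Carlo search: starting from the current prefix, the generator rolls out N complete sequences, and the discriminator's scores for them are averaged.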
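The Monte Carlo reward estimate can be sketched as below. A complete sequence is scored by the discriminator directly, and a partial one is completed N times by a rollout policy before averaging. The toy rollout policy and "discriminator" are hypothetical stand-ins for the trained networks, chosen only so the expected value is easy to check by hand.

```python
import random
from typing import Callable, List

Policy = Callable[[List[str], random.Random], str]
Discriminator = Callable[[List[str]], float]


def mc_reward(prefix: List[str], rollout_policy: Policy, discriminator: Discriminator,
              max_len: int, n_rollouts: int, rng: random.Random) -> float:
    """Estimate the reward of a (possibly partial) sequence.

    A complete sequence is scored by the discriminator directly. A partial one
    is completed n_rollouts times with the rollout policy, and the
    discriminator's scores for the completed sequences are averaged.
    """
    if len(prefix) >= max_len:
        return discriminator(prefix)
    total = 0.0
    for _ in range(n_rollouts):
        seq = list(prefix)
        while len(seq) < max_len:
            seq.append(rollout_policy(seq, rng))
        total += discriminator(seq)
    return total / n_rollouts


# Hypothetical toy setup: a uniform rollout policy over {"a", "b"} and a
# "discriminator" that scores a sequence by its fraction of "a" tokens.
def uniform_policy(seq: List[str], rng: random.Random) -> str:
    return rng.choice(["a", "b"])


def frac_a(seq: List[str]) -> float:
    return seq.count("a") / len(seq)


rng = random.Random(0)
# The exact expected reward of the prefix ["a"] with max_len=2 is 0.75.
print(mc_reward(["a"], uniform_policy, frac_a, max_len=2, n_rollouts=1000, rng=rng))
```

Larger N gives a lower-variance reward estimate at the cost of N extra generator rollouts per prefix, which is the main computational cost of this approach.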

The generator is then updated with the policy gradient method, and the expression for its gradient is derived from the policy gradient theorem.
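In SeqGAN the generator's gradient sums, over time steps, the gradient of the log-probability of each chosen token weighted by that step's estimated reward (the Monte Carlo estimate above). The sketch below shows a single such REINFORCE step for one time step, with the LSTM replaced by a bare vector of logits over a toy vocabulary. That simplification and the names are assumptions for illustration.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(logits: List[float], action: int, reward: float, lr: float) -> List[float]:
    """One REINFORCE update for a softmax policy over the vocabulary.

    The gradient of log softmax(logits)[action] with respect to logit i is
    1[i == action] - p_i. Scaling it by the reward and taking a gradient
    ascent step makes rewarded tokens more likely.
    """
    probs = softmax(logits)
    grad = [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]
    return [l + lr * reward * g for l, g in zip(logits, grad)]


logits = [0.0, 0.0, 0.0]  # uniform policy over a 3-word vocabulary
updated = policy_gradient_step(logits, action=1, reward=0.8, lr=0.5)
print(softmax(updated))  # the probability of word 1 rises above 1/3
```

A negative reward pushes the same logit down instead, so the discriminator's judgment steers the generator without ever differentiating through the discrete token.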

The experimental results include synthetic data and real-world examples like Chinese poetry and Obama speeches. BLEU scores are used for evaluation.

3. **Adversarial Learning for Neural Dialogue Generation**

Paper link: https://arxiv.org/pdf/1701.06547.pdf

Source: https://github.com/jiweil/Neural-Dialogue-Generation

This paper introduces adversarial training for open-domain dialogue generation. It uses a seq2seq model as the generator and a hierarchical encoder as the discriminator. The reward mechanism is similar to SeqGAN's, but to improve stability it also trains the generator on human-written responses, which receive a positive reward (a form of teacher forcing).

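One key difference is how rewards are calculated for partially generated sequences. Besides Monte Carlo search, the paper trains the discriminator to score partial sequences directly. To avoid overfitting on the many overlapping prefixes of a single response, it randomly samples only one positive and one negative subsequence from each full sequence.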
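The subsequence sampling can be sketched as follows: from each full human response and each full generated response, draw one random non-empty prefix and label it positive or negative for the discriminator. Treating "subsequence" as "prefix" and the function names are assumptions for this illustration.

```python
import random
from typing import List, Tuple


def random_prefix(tokens: List[str], rng: random.Random) -> List[str]:
    """Return one randomly chosen non-empty prefix of a sequence."""
    k = rng.randint(1, len(tokens))
    return tokens[:k]


def partial_training_pair(human: List[str], generated: List[str],
                          rng: random.Random) -> List[Tuple[List[str], int]]:
    """One positive and one negative partial example per dialogue turn.

    Sampling a single prefix from each full response, rather than using every
    prefix, avoids feeding the discriminator many highly correlated examples.
    """
    return [(random_prefix(human, rng), 1), (random_prefix(generated, rng), 0)]


rng = random.Random(0)
human = "i am doing well thanks".split()
generated = "i do not know".split()
print(partial_training_pair(human, generated, rng))
```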

4. **GANs for sequence of discrete elements with the Gumbel-softmax distribution**

Paper link: https://arxiv.org/pdf/1611.04051.pdf

This paper proposes handling discrete data with the Gumbel-softmax trick, a continuous relaxation of sampling from a categorical distribution. Because this relaxation makes the sampling step differentiable, the GAN can be trained on text with ordinary backpropagation. The experiments are limited, covering only sequences generated by a context-free grammar.
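A minimal, framework-free sketch of the Gumbel-softmax relaxation: Gumbel(0, 1) noise is added to each logit, and the result is passed through a temperature-scaled softmax. The function name and toy logits are hypothetical, and the code only computes the forward pass. A real implementation would run inside an autodiff framework so that gradients flow through it. The paper embeds this in an RNN generator, which is not reproduced here.

```python
import math
import random
from typing import List


def gumbel_softmax(logits: List[float], temperature: float, rng: random.Random) -> List[float]:
    """Draw a relaxed one-hot sample from the categorical distribution softmax(logits).

    Gumbel(0, 1) noise is added to each logit and the result goes through a
    softmax with the given temperature. As temperature -> 0 the output
    approaches a one-hot vector whose argmax is an exact categorical sample
    (the Gumbel-max trick); for temperature > 0 it is a smooth function of
    the logits.
    """
    noisy = []
    for logit in logits:
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep log() finite
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    top = max(noisy)
    exps = [math.exp(x - top) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
logits = [math.log(0.7), math.log(0.2), math.log(0.1)]
print(gumbel_softmax(logits, temperature=1.0, rng=rng))  # a soft vector that sums to 1
print(gumbel_softmax(logits, temperature=0.1, rng=rng))  # typically close to one-hot
```

The temperature controls the trade-off: low values give nearly discrete outputs but high-variance gradients, while high values give smooth outputs that look less like real one-hot words.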

5. **Connecting generative adversarial network and actor-critic methods**

Paper link: https://arxiv.org/pdf/1610.01945.pdf

This paper draws parallels between GANs and actor-critic methods in reinforcement learning [2]. In both, one network (the generator, or actor) learns from the signal of another network (the discriminator, or critic) that is trained at the same time, so both can be viewed as bilevel optimization problems. This connection could inspire new approaches to sequence prediction [3] and other NLP tasks.
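To make the actor-critic side of the analogy concrete, here is a standard tabular one-step actor-critic update. This is a textbook technique, not code from the paper, and the states, names and learning rates are hypothetical. The critic learns a value estimate, and its TD error is the feedback signal that moves the actor. Roughly speaking, this plays the role the discriminator's score plays for a GAN generator.

```python
import math
from typing import Dict, List


def softmax(logits: List[float]) -> List[float]:
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic_step(values: Dict[str, float], logits: Dict[str, List[float]],
                      state: str, action: int, reward: float, next_state: str,
                      done: bool, gamma: float = 0.99,
                      critic_lr: float = 0.1, actor_lr: float = 0.1) -> float:
    """One tabular one-step actor-critic update; returns the TD error.

    The critic V(s) moves toward the bootstrapped target r + gamma * V(s');
    the actor's logits for `state` move along grad log pi(action | state),
    scaled by the TD error computed from the critic.
    """
    target = reward + (0.0 if done else gamma * values[next_state])
    td_error = target - values[state]
    values[state] += critic_lr * td_error  # critic update
    probs = softmax(logits[state])
    logits[state] = [l + actor_lr * td_error * ((1.0 if i == action else 0.0) - p)
                     for i, (l, p) in enumerate(zip(logits[state], probs))]  # actor update
    return td_error


values = {"s0": 0.0, "end": 0.0}
logits = {"s0": [0.0, 0.0]}
delta = actor_critic_step(values, logits, "s0", action=1, reward=1.0, next_state="end", done=True)
print(delta, values["s0"], softmax(logits["s0"]))  # action 1 becomes more likely
```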

Overall, these papers show the potential and challenges of applying GANs to NLP. While the field is still evolving, the techniques explored offer valuable insights for future research.

[1] In-depth interpretation: GAN model and its progress in 2016

[2] Actor-Critic Algorithms

[3] An actor-critic algorithm for sequence prediction
