2016 was a memorable year for the field of artificial intelligence. Not only do computers "learn" more, and faster, but we also understand better how to improve these systems. Everything is on the right track, and we are witnessing advances never seen before: a program that can tell stories from pictures, driverless cars, and even a program that can create art. If you want to know more about the progress made in 2016, be sure to read this article. AI has gradually become the core of many technologies, so understanding some common terms and working principles has become important.

What is artificial intelligence?

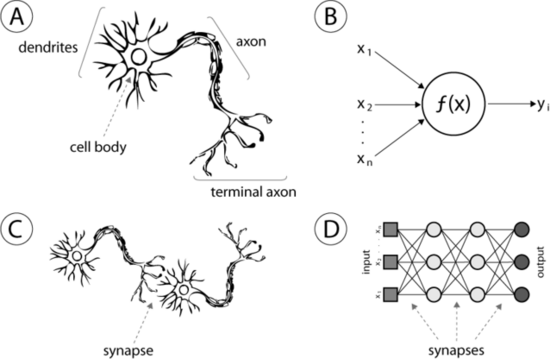

Many advances in artificial intelligence are new statistical models, the vast majority of which come from a technology called artificial neural networks, or ANNs. This technique mimics the structure of the human brain very roughly. It is worth noting that artificial neural networks and neural networks are different things. Many people drop the word "artificial" from "artificial neural network" for convenience. This is inaccurate, because the word "artificial" is there to distinguish these models from the neural networks studied in computational neurobiology. The following are real neurons and synapses.

Our ANNs contain computational units called "neurons". These artificial neurons are connected by "synapses", which are really just weight values. This means that, given a number, a neuron performs some kind of calculation (such as a sigmoid function), and the result is multiplied by a weight. If your neural network has only one layer, the weighted result is the output of the network. Alternatively, you can stack multiple layers of neurons, which is the basic concept behind deep learning.
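To make the single-neuron description concrete, here is a minimal sketch in Python. It follows the order described above (calculation first, then the weight); many textbooks instead apply the weight before the activation. The function names `sigmoid` and `neuron_output` are illustrative choices, not taken from any library.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(x: float, weight: float) -> float:
    """Apply the neuron's calculation, then scale by the synapse weight."""
    return sigmoid(x) * weight


# sigmoid(0.0) is exactly 0.5, so a weight of 2.0 gives 1.0.
print(neuron_output(0.0, 2.0))
```

In a one-layer network this weighted value would be the final output; in a deeper network it would be passed on to the next layer.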

Where did they originate?

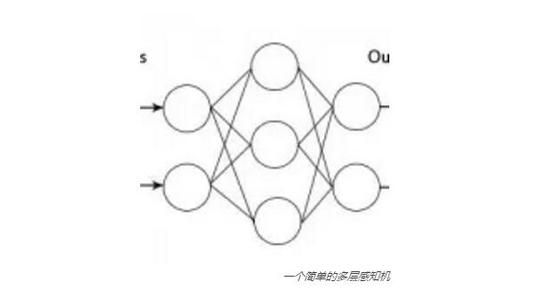

Artificial neural networks are not a new concept. In fact, they were not always called neural networks, and their earliest form looked completely different from what we see today. In the 1960s they were called perceptrons, which were built from McCulloch-Pitts neurons. There were even perceptrons with a bias. Eventually, people began to create multi-layer perceptrons, which are the artificial neural networks we usually hear about today.

If neural networks began in the 1960s, why did they only become popular recently? It is a long story. In short, several things held back the development of ANNs. For example, computing power used to be insufficient, and there was not enough data to train these models. Using neural networks could also feel uncomfortable, because their behavior seemed rather arbitrary. But every one of these factors is changing. Today our computers are faster and more powerful, and thanks to the growth of the Internet we have all kinds of data to use.

How do they work?

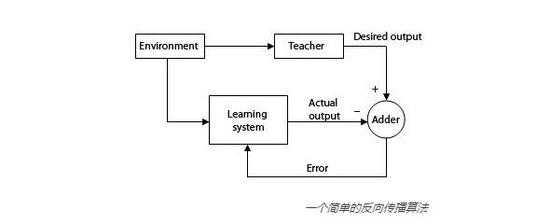

I mentioned above the neurons and synapses that run the calculations. You might ask: "How do they learn what kind of calculations to perform?" Essentially, the answer is that we need to ask them a lot of questions and provide them with the answers. This is called supervised learning. With enough "question-answer" cases, the calculations and weights stored in each neuron and synapse can be slowly adjusted. Usually, this is done through a process called backpropagation.

Imagine that you are walking along the sidewalk and come across a lamppost you have never seen before, so you walk straight into it and cry out. The next time, you pass a few inches to the side of the lamppost, but your shoulder still clips it and you cry out again. The third time you see the lamppost, you give it a wide berth to make sure you don't touch it at all. But then another accident happens: while avoiding the lamppost you run into a mailbox you have never seen before, and the whole "tragedy of the lamppost" repeats itself. This example is somewhat oversimplified, but it is essentially how backpropagation works. An artificial neural network is given many similar cases, and it tries to produce the same answer as the one provided with each case. When its output is wrong, the error is calculated and propagated back through the network, adjusting the values of each neuron and synapse for the next calculation. This process requires a lot of cases. For practical applications, the number of cases required may reach the millions.
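The adjust-after-every-mistake loop can be sketched in a few lines of Python. This is a toy illustration under several assumptions of my own: a chain of one hidden neuron and one output neuron, a squared-error measure, made-up "question-answer" cases (is the number positive?), and arbitrary starting weights and learning rate. Real networks are far larger, but the backward flow of the error is the same idea.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(params, x):
    """Forward pass: returns the hidden activation and the final output."""
    w1, b1, w2, b2 = params
    hidden = sigmoid(w1 * x + b1)
    return hidden, sigmoid(w2 * hidden + b2)


def total_error(params, cases):
    """Sum of squared differences between outputs and provided answers."""
    return sum((predict(params, x)[1] - y) ** 2 for x, y in cases)


def train(cases, start, epochs=5000, learning_rate=0.5):
    w1, b1, w2, b2 = start
    for _ in range(epochs):
        for x, y in cases:
            hidden, output = predict((w1, b1, w2, b2), x)
            # Error signal at the output neuron (chain rule through sigmoid).
            delta_out = (output - y) * output * (1.0 - output)
            # Propagate that signal back through w2 to the hidden neuron.
            delta_hidden = delta_out * w2 * hidden * (1.0 - hidden)
            # Nudge every weight and bias against its share of the error.
            w2 -= learning_rate * delta_out * hidden
            b2 -= learning_rate * delta_out
            w1 -= learning_rate * delta_hidden * x
            b1 -= learning_rate * delta_hidden
    return (w1, b1, w2, b2)


# Hypothetical cases: the answer is 1.0 when x is positive, else 0.0.
cases = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]
start = (0.5, 0.0, 0.5, 0.0)
trained = train(cases, start)
print(total_error(start, cases), total_error(trained, cases))
```

The second printed number should be smaller than the first: after many passes over the cases, the network bumps into the "lamppost" less and less.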

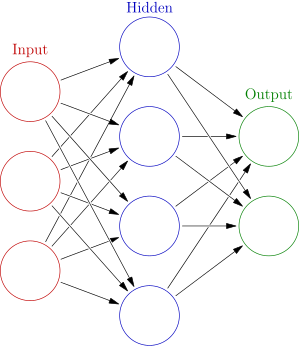

Now that we understand artificial neural networks and how they work, another question may come to mind: how do we know how many neurons we need? And why did I stress the word "multi-layer" earlier? In fact, each layer of an artificial neural network is a collection of neurons. There is an input layer, where data enters the ANN, followed by a number of hidden layers, which is where the magic happens. Finally, there is an output layer, where the final results of the ANN's calculations are placed for us to use.
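As a rough sketch of how data flows from the input layer through a hidden layer to the output layer, consider the following Python. It assumes the common formulation in which each neuron takes a weighted sum of all its inputs, adds a bias, and applies a sigmoid; the layer sizes and weight values are made up for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """One layer: every neuron sees all inputs and produces one number."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the inputs through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_layer = ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1])
output_layer = ([[0.7, -0.2, 0.5]], [0.05])

result = network_forward([1.0, 2.0], [hidden_layer, output_layer])
print(result)  # a list with a single value between 0 and 1
```

Adding more hidden layers only means adding more `(weights, biases)` pairs to the list; the forward loop itself does not change.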

A layer is itself a collection of neurons. In the age of multi-layer perceptrons, we initially thought that an input layer, one hidden layer, and an output layer would suffice. At the time, it worked: enter a few numbers, and a single set of calculations gives you the result. If the ANN's result was not correct, you could add more neurons to the hidden layer. Eventually we understood that this was really just creating a linear map from each input to each output. In other words, a particular input had to correspond to a particular output. We could only deal with input values we had seen before, with no flexibility at all. This is definitely not what we want.

Today, deep learning gives us more hidden layers, which is one of the reasons our ANNs are better now. These networks need hundreds of nodes and at least dozens of layers, which means a much larger number of variables to track in real time. Advances in parallel programming have also enabled us to run larger ANNs using batch calculations. Our artificial neural networks are becoming so large that we can no longer run a single iteration over the entire network at once. Instead, we perform batch calculations on subsets across the network, and backpropagation is applied only after each iteration is complete.
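One common way to work in batches is to split the training cases into small groups and apply one correction per group. The sketch below is a simplified illustration of that idea, not the method of any particular framework: it fits a single weight to made-up data where the answer is always twice the input, and the helper name `minibatches`, the batch size, and the learning rate are all assumptions of mine.

```python
import random


def minibatches(examples, batch_size, rng):
    """Shuffle the examples and yield them in chunks of batch_size."""
    shuffled = examples[:]
    rng.shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        yield shuffled[start:start + batch_size]


def train_in_batches(examples, batch_size=4, epochs=200, learning_rate=0.1):
    rng = random.Random(0)  # fixed seed so runs are repeatable
    weight = 0.0
    for _ in range(epochs):
        for batch in minibatches(examples, batch_size, rng):
            # Average the error gradient over the batch, then update once.
            gradient = sum((weight * x - y) * x for x, y in batch) / len(batch)
            weight -= learning_rate * gradient
    return weight


# Hypothetical data: 21 cases where the answer is exactly 2 * x.
examples = [(i / 10.0, 2.0 * i / 10.0) for i in range(-10, 11)]
learned_weight = train_in_batches(examples)
print(learned_weight)  # should end up very close to 2.0
```

Because each update only needs one batch in memory, the same loop structure scales to data sets and networks that would not fit in a single pass.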
